DNN training and inference are composed of similar basic operators but have fundamentally different requirements. The former is throughput-bound and relies on high-precision floating-point arithmetic for convergence, while the latter is latency-bound and tolerant of low-precision arithmetic. Nevertheless, both workloads exhibit high computational demands and can benefit from hardware accelerators.

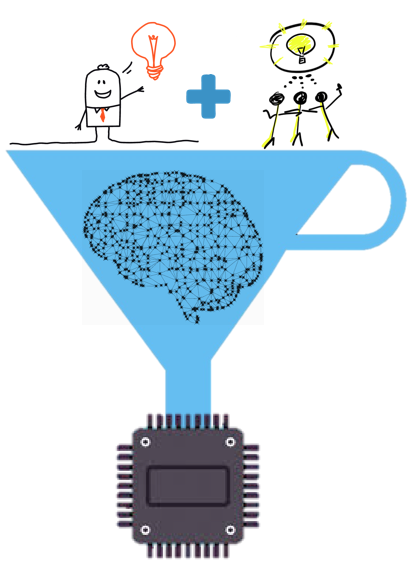

Unfortunately, this disparity in resource requirements forces datacenter operators to choose between deploying separate custom accelerators for training and for inference, or using training accelerators for inference as well. Both options are suboptimal: the former makes the datacenter heterogeneous, which increases management costs, while the latter results in inefficient inference. Furthermore, dedicated inference accelerators face load fluctuations, which lead to overprovisioning and, ultimately, low average utilisation. ColTraIn proposes co-locating training and inference in datacenter accelerators. Our ultimate goal is to restore datacenter homogeneity without sacrificing either inference efficiency or quality of service (QoS) guarantees. The two key challenges to overcome are (1) the difference in the arithmetic representation used by these workloads, and (2) the scheduling of training tasks on inference-bound accelerators.

We addressed the first challenge with Hybrid Block Floating Point (HBFP), which trains DNNs using dense, fixed-point-like arithmetic for the vast majority of operations without sacrificing accuracy, paving the way for effective co-location.
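
To make the idea of "fixed-point-like" arithmetic concrete, the following is a minimal sketch of a block floating point dot product. It is an illustration under stated assumptions, not the HBFP implementation: the function names `bfp_quantize` and `bfp_dot`, the 8-bit mantissa width, and the choice of one block per vector are all hypothetical. Each block of values shares a single exponent, so the multiply-accumulate runs entirely on integers and only one floating-point rescale is needed per block pair. In a hybrid scheme, operations other than dot products would presumably stay in ordinary floating point.

```python
import math

def bfp_quantize(block, mantissa_bits=8):
    """Quantize a list of floats to signed integer mantissas plus one shared exponent.

    Hypothetical helper for illustration; each value is approximated as
    mantissa * 2**(shared_exp - (mantissa_bits - 1)).
    """
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return [0] * len(block), 0
    # frexp returns (m, e) with max_abs == m * 2**e and 0.5 <= m < 1,
    # so the largest value determines the exponent for the whole block.
    _, shared_exp = math.frexp(max_abs)
    scale = 2.0 ** (mantissa_bits - 1 - shared_exp)
    limit = 2 ** (mantissa_bits - 1) - 1  # largest representable signed mantissa
    mantissas = [max(-limit, min(limit, round(x * scale))) for x in block]
    return mantissas, shared_exp

def bfp_dot(a, b, mantissa_bits=8):
    """Dot product of two blocks using integer multiply-accumulate."""
    ma, ea = bfp_quantize(a, mantissa_bits)
    mb, eb = bfp_quantize(b, mantissa_bits)
    acc = sum(x * y for x, y in zip(ma, mb))  # pure integer arithmetic
    # A single floating-point rescale converts the integer sum back.
    return acc * 2.0 ** (ea + eb - 2 * (mantissa_bits - 1))
```

Values that are small relative to the largest element of their block lose precision, which is why the block size and mantissa width are the main tuning knobs in any scheme of this kind.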

We are currently working on the second challenge by developing a co-locating accelerator. Our design adds training capabilities to an inference accelerator and pairs it with a scheduler that takes both resource utilisation and tasks' QoS constraints into account when co-locating DNN training and inference.
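
As a rough illustration of a scheduling decision that weighs utilisation against QoS, the sketch below computes how much of an accelerator could be handed to training at a given moment. Everything in it is an assumption made for the example: the `InferenceLoad` fields, the `training_share` function, and the `headroom` and `slo_margin` parameters are hypothetical and do not describe the actual ColTraIn scheduler.

```python
from dataclasses import dataclass

@dataclass
class InferenceLoad:
    """Hypothetical snapshot of the inference workload on one accelerator."""
    utilisation: float     # fraction of the accelerator busy with inference, 0..1
    latency_ms: float      # currently observed inference latency
    latency_slo_ms: float  # QoS bound that inference must meet

def training_share(load, headroom=0.1, slo_margin=0.8):
    """Return the fraction of the accelerator to give to training.

    Training only receives capacity that inference is not using, minus a
    safety headroom, and is paused entirely when inference latency gets
    close to its QoS bound.
    """
    # QoS check: back off completely if latency nears the bound.
    if load.latency_ms > slo_margin * load.latency_slo_ms:
        return 0.0
    # Utilisation check: hand over idle capacity, keeping headroom for bursts.
    idle = 1.0 - load.utilisation - headroom
    return max(0.0, idle)
```

For example, with inference at 30% utilisation and latency well under its bound, this policy would give roughly 60% of the accelerator to training, and it would drop to zero as soon as latency exceeds 80% of the bound.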

Sponsors